Contributions to FreeBSD

My first encounter with the FreeBSD operating system was when I was a tiny kid: a computer my dad brought home from a server environment had it on the hard drive. I didn’t do much with it back then and quickly installed Windows, of course. Some time later I became a Linux user, then I got a Mac mini to run macOS on, but after some years in the Apple world I was looking for more freedom again and… inexplicably landed back on FreeBSD.

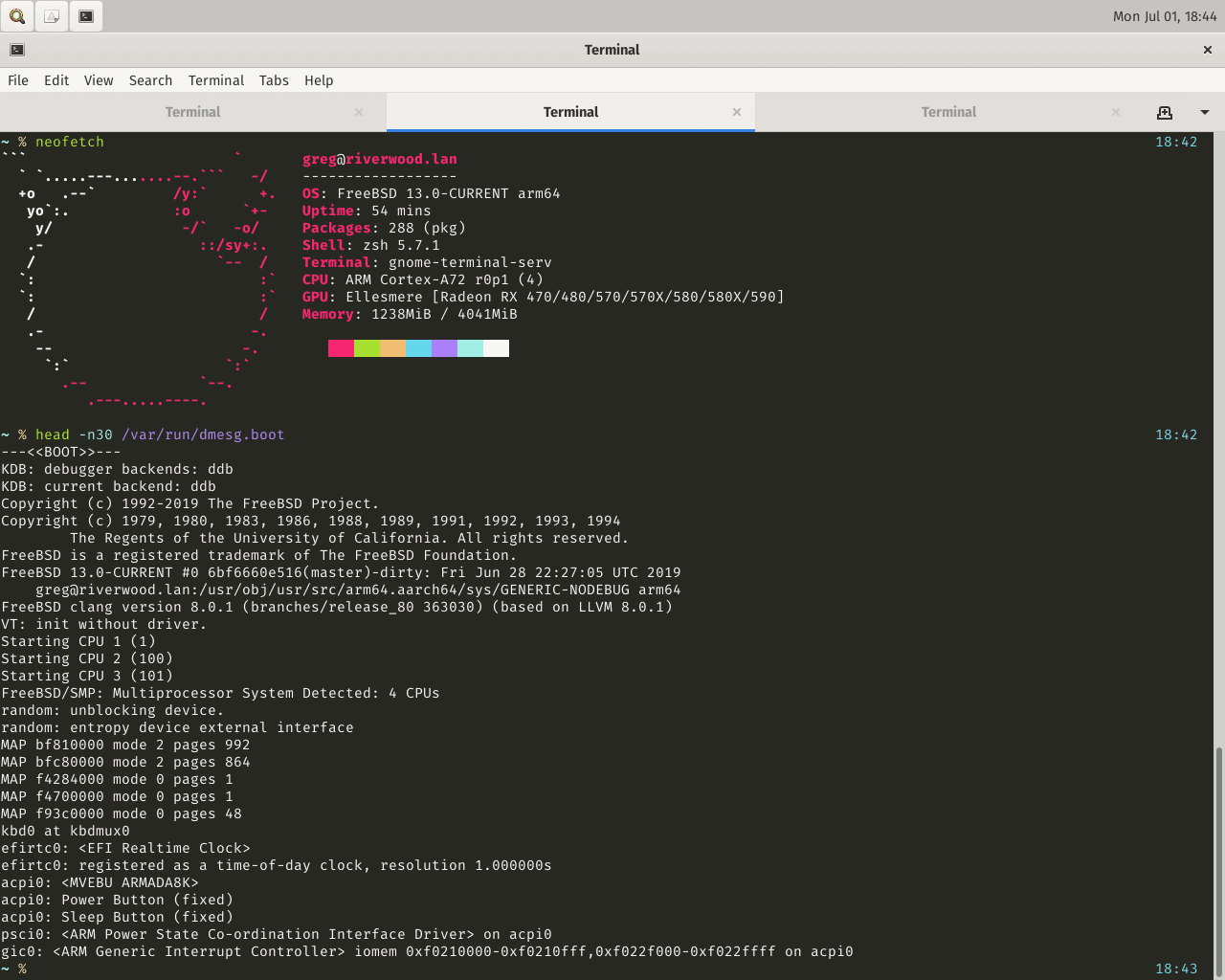

Me being me, and non-mainstream desktop platforms being non-mainstream, my daily usage was of course intertwined with code contributions, both to the FreeBSD project itself and to a bunch of other projects (including Firefox and Wayland) to improve FreeBSD support upstream. And because a non-mainstream OS alone was not enough, my interest in the Arm architecture resulted in me bringing up the first usable FreeBSD/aarch64 desktop with an AMD GPU.

The graphics stack

The port of the DRM/KMS GPU driver ecosystem from Linux to FreeBSD has quite a history. Originally it was done by manually modifying everything to use FreeBSD kernel APIs, which was becoming really unmaintainable: for a long time Sandy/Ivy Bridge was the last supported Intel generation, and when I bought a Haswell laptop in late 2015, the final upgrade of that port (to Linux version 3.8) was what I used on it at first. After that, it was replaced by a port using LinuxKPI, a compatibility layer that translates Linux kernel APIs to FreeBSD ones.

My first contribution there was fixing 32-bit app compatibility back in 2017.

Around that time I also added more stuff to linsysfs to make graphics work in Linux apps.

In 2018-19 I worked on monitor hotplug notifications for Wayland compositors, early kernel-based backlight support (via sysctl, way before the backlight tool), and 64-bit Arm support.

In 2020 I landed another Linux app compatibility fix, now for PRIME ioctls.

2021 was quite a fun year, with me adding a PCI info ioctl like on other BSDs, fighting amdgpu’s FPU usage in the kernel to make AMD Navi GPUs work (it took a couple of tries to figure out the right way to implement FPU sections in D29921), and finally solving the baffling “AMD GPU vs EFI framebuffer conflict” that had been annoying users for a few years. It turned out that FreeBSD’s basic framebuffer console, which kept writing to VRAM while the driver was loading, could sometimes corrupt the memory that was used for the initialization, and “sometimes” was indeed influenced by how large the current monitor was.

In 2022 I made AMD RDNA2 “Big Navi” GPUs work by backporting patches — again FPU related ones.

On the userspace side, I have contributed FreeBSD-related (and general portability related) patches to upstream projects like Mesa.

The input stack

Originally, input drivers on FreeBSD exposed their own custom interfaces.

Around 2017, work was happening to adopt the evdev protocol from Linux, which was great news for the ability to run Wayland compositors.

Before that, however, I did mess around with xf86-input-synaptics a bit, fixing TrackPoint and ClickPad support on FreeBSD.

After that, I did a lot of tech support on the mailing lists related to enabling evdev, until it was made the default :)

I’ve also contributed various improvements, like adding device info sysctls (D18694) and using them in libudev-devd to make it possible for compositors to enumerate devices without having file permissions on them (which compositors expect, because on Linux enumeration happens via actual udev), fixing joystick enumeration in libudev-devd, and so on.

Also, since the beginning of USB support, HID support has been very… limited.

FreeBSD only had specific drivers like ukbd (USB Keyboard) and ums (USB Mouse) which took control of a whole USB interface, only supporting one function no matter what else was exposed in the HID descriptor.

When laptops with HID-over-I²C started appearing, the need for a proper HID stack became apparent, and the iichid project by wulf@ came to the rescue, bringing a proper HID abstraction to the table.

I have contributed a few fixes and a couple of drivers: hpen for tablet pens, and hgame/xb360gp for gamepads.

Getting a Google Pixelbook was the driving factor for a bunch of these contributions, as well as the convertible lid switch driver and keyboard backlight driver, and some non-input things like a driver for an I²C transport for the TPM.

I have also worked on Apple trackpad support:

- Magic Trackpad 1 via bthidd (I don’t remember why it hasn’t been upstreamed yet…)

- Magic Trackpad 2 over USB

- Apple HID-over-SPI transport (the atopcase(4) driver), enabling the 2015-2018 MacBooks to have keyboard and trackpad input

Porting various things to FreeBSD

Over the years I have contributed FreeBSD-related improvements and fixes to a variety of upstream projects, like the Firefox browser and Wayland ecosystem projects, as well as many others including:

- PulseAudio sound server (upstreaming existing downstream fixes, adding FreeBSD to the Meson build files, adding a devd hotplug backend)

- Heaptrack memory profiler

- Lucet WebAssembly compiler

- ISPC SPMD program compiler

- LDC, OCaml, Mono, libunwind, libffi, zlib-ng (64-bit Arm support)

- Rust: libc, nix, and a bunch of smaller crates…

I have also maintained a bunch of packaging recipes in the FreeBSD Ports Collection.

In order to make more software run properly on FreeBSD, I also contributed some kernel changes:

- allowing flock to be called on FIFOs (D24255), because the aforementioned Heaptrack does that

- exposing the eventfd implementation, originally only intended for the Linux syscall compat layer, in the native FreeBSD ABI (D26668), because Firefox started passing eventfds across processes while setting up hardware video decoding, so a kernel-level implementation was necessary

- allowing real-time priority to be set on any process’s threads (D27158), to make it possible for RealtimeKit to do what it needs to do

- allowing extended attribute manipulation syscalls to work on O_PATH file descriptors (D32438), to make that part of xdg-desktop-portal’s document portal work
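The eventfd change is the easiest of these to illustrate from userspace. Below is a minimal sketch of the counter semantics that eventfd users rely on, assuming a system that provides <sys/eventfd.h> (Linux, or FreeBSD 13 and later, where the native implementation is exposed). The helper name signal_and_drain is made up for this example; it is not taken from Firefox, and it does not show the cross-process descriptor passing itself.

```c
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Hypothetical helper: post two events to a fresh eventfd, then read the
 * accumulated counter back. Returns the counter value, or -1 on error. */
int64_t signal_and_drain(uint64_t a, uint64_t b)
{
    int fd = eventfd(0, 0);              /* counter starts at 0, no flags */
    if (fd < 0)
        return -1;

    /* Each 8-byte write adds its value to the kernel-side counter. */
    if (write(fd, &a, sizeof a) != (ssize_t)sizeof a ||
        write(fd, &b, sizeof b) != (ssize_t)sizeof b) {
        close(fd);
        return -1;
    }

    /* Without EFD_SEMAPHORE, one read returns the whole sum and resets it. */
    uint64_t value = 0;
    ssize_t n = read(fd, &value, sizeof value);
    close(fd);
    return n == (ssize_t)sizeof value ? (int64_t)value : -1;
}
```

Because the counter accumulates until it is read, two quick signals are coalesced into a single wakeup carrying their sum (signal_and_drain(1, 2) yields 3); creating the descriptor with EFD_SEMAPHORE would instead make each read return 1 and decrement the counter.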

Arm platform bring-up stories

Some of my most interesting FreeBSD work was related to making (more of) the OS work on Arm-based systems. Over time, it became more and more involved, getting deeper into various kernel and firmware complexities, and it has paid off: being the first person to have a working FreeBSD/aarch64 desktop system with a fully working AMD GPU felt immensely satisfying.

Scaleway ThunderX VPS

My first bring-up story, from back in 2018, does not involve touching kernel code yet, as the hardware platform was already well supported. The original Cavium (now Marvell) ThunderX was the first production 64-bit Arm (aarch64) server unit, and FreeBSD was actually using those for its build farm back then.

When the European cloud provider Scaleway introduced their ThunderX-based VPS product, it didn’t officially support local boot, so installing custom operating systems was not supported. However, I went ahead and experimented with it to see if I could “depenguinate” a running VPS.

Turns out, yes! This wasn’t too hard, and I published my results on the now-defunct (hence the Wayback Machine link) Scaleway community forum.

Most of the effort was on the Linux side: carefully copying the necessary parts of the running system to a RAM disk, doing pivot_root, and putting the FreeBSD installer onto a disk partition.

Then — luckily — it was possible to select the boot device by pressing Esc on the remote serial console.

The only non-trivial thing on the FreeBSD side was figuring out why storage was awfully slow: it turned out the system considered the PCIe controller to have broken MSI support and used legacy interrupts, so it was necessary to set hw.pci.honor_msi_blacklist="0".

After publishing the thread, I even received an email from someone at Scaleway saying they’d buy me a beer if I was ever in their city :) However, a few months later local boot became impossible, despite the website claiming “It will become available for our ARM64 virtual cloud server in a few days”. And then… the Scaleway ARM64 offering was sadly discontinued.

Pine64 ROCKPro64 (Rockchip RK3399)

I was the first person to boot FreeBSD on the ROCKPro64 SBC. Since it is an embedded, Device Tree based platform, there was no smart firmware to handle everything in early boot, so the initial bring-up involved things like SoC clock and pin control (D16732).

I got Ethernet, XHCI USB (D19335) and SD card (D19336) peripherals running; however, those experimental patches were later completely rewritten by committers (mostly manu@). This work involved more code than the ACPI-based things described below, but far less interesting debugging.

AWS EC2 Graviton (a1)

In late 2018, Amazon Web Services launched their first Arm VM instances, running on their own SoC called Graviton. Naturally, the FreeBSD community was quite interested in supporting it. Since the environment is an SBSA-standard UEFI/ACPI one, all operating systems should “just work”. However: bugs :)

After adding arm64 support to the EC2 image build tool and booting a VM, I was greeted with… nothing on the serial console after the UEFI loader’s output. Which is very “fun” to debug on a completely remote machine.

Patch D19507 is where my UART adventures are documented.

Basically, the problem was that the AccessWidth field of the SPCR ACPI table was not used, resulting in FreeBSD using the wrong memory access width for the EC2 virtual UART.
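For context, AccessWidth is the Access Size byte of the ACPI Generic Address Structure embedded in SPCR, and it uses a small encoding rather than a byte count. The sketch below decodes it; the function names (gas_access_bytes, uart_io_width) and the fallback policy are hypothetical, not FreeBSD’s actual UART code.

```c
#include <stdint.h>

/* Decode the Access Size byte of an ACPI Generic Address Structure, which
 * SPCR uses to describe the UART's base address:
 * 0 = undefined (legacy), 1 = byte, 2 = word, 3 = dword, 4 = qword.
 * Returns the width in bytes, or 0 if the firmware left it undefined. */
unsigned gas_access_bytes(uint8_t access_size)
{
    switch (access_size) {
    case 1: return 1;
    case 2: return 2;
    case 3: return 4;
    case 4: return 8;
    default: return 0;   /* 0 or a reserved value */
    }
}

/* Hypothetical policy: trust the SPCR value when it is defined, otherwise
 * fall back to the width the UART driver would normally use. */
unsigned uart_io_width(uint8_t spcr_access_size, unsigned driver_default)
{
    unsigned width = gas_access_bytes(spcr_access_size);
    return width != 0 ? width : driver_default;
}
```

A driver would then issue 8-, 16-, 32- or 64-bit register accesses accordingly; an AccessWidth of 3 means 4-byte accesses, and using a different width than the hardware decodes can easily leave the console silent.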

Oh, and the direct manual configuration via hw.uart.console, which I was using at first to simply match all the serial console settings to Linux, was broken on aarch64 too.

Other than the serial console, there actually weren’t any problems in FreeBSD: the VMs just worked, and I posted an early dmesg log on 2019-03-09. From there, it was Colin’s work to polish everything (especially the image build tool) and make it official. Now Arm EC2 images are officially published by the FreeBSD project alongside the amd64 ones.

Ampere eMAG

Soon after the Graviton, bare-metal Arm boxes powered by the new Ampere eMAG CPUs became available from Packet.com (now Equinix Metal). I was the first to boot FreeBSD to multi-user on one of these (again starting with a depenguination), starting the Bugzilla thread that was later joined by Ampere engineers.

Bring-up on a completely remote system is always “fun”, especially when you start with — once again — the serial console not even working.

However, having had the experience from the Graviton, I knew where to look.

In fact, it was my patch for the Graviton that broke it on these eMAG servers, because their firmware reported a wrong AccessWidth value.

We added a quirk to always use the correct value for that kind of UART (D19507), and the Ampere employees fixed later revisions of the firmware as well.

With help from Ampere, missing address translations were added to make PCIe work.

In D19987 I enabled ioremap in the LinuxKPI driver translation layer to make the Mellanox NIC driver work, which got networking going on those remote servers and allowed me to post a dmesg.

I also landed D19986, adding support for XHCI (USB 3.0) controllers described in ACPI (rather than discovered over PCIe), as the eMAG was the first platform where we encountered that.

Other people have handled various other tricky issues related to EFI memory maps and such, and in the end the platform worked very well.

SolidRun MACCHIATObin (Marvell Armada A8040)

Having a fully working Arm desktop machine that works exactly like a regular AMD64 one was the big dream (it was really getting to me in 2019), and the MACCHIATObin mini-ITX board, running Marvell’s networking-targeted Armada8k SoC, seemed very promising. The firmware situation was amazing, with upstream TianoCore EDK2 support and nearly everything in the early boot process being open source (including memory training). The board was also convenient for firmware development, as it could load firmware from microSD, onboard SPI flash, or over the UART. And in fact, I had seen reports of people running GPUs on it! After confirming that its PCIe controller did work as a generic ECAM one, I ordered the board. When it arrived, I first replaced the stock U-Boot firmware with a preexisting EDK2 UEFI build and started trying to run the OS in ACPI mode.

Continuing the UART/SPCR theme… This time FreeBSD didn’t like BaudRate being zero, which I fixed in D19914.
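The SPCR specification defines BaudRate as a small enumeration in which 0 means “as is”: the OS should keep the configuration the firmware left behind rather than treat zero as a literal rate. A minimal decoder follows; the function names are hypothetical (this is not the code from D19914), and the divisor helper assumes a 16550-style UART purely for illustration.

```c
#include <stdint.h>

/* Decode the SPCR "Baud Rate" byte. Per the SPCR specification,
 * 0 means "as is": keep the configuration the firmware left behind.
 * Returns bit/s, 0 for "as is", or -1 for values the spec does not define. */
long spcr_baud_rate(uint8_t code)
{
    switch (code) {
    case 0: return 0;
    case 3: return 9600;
    case 4: return 19200;
    case 6: return 57600;
    case 7: return 115200;
    default: return -1;
    }
}

/* 16550-style divisor latch value for a given UART input clock.
 * Returns 0 to mean "do not reprogram the divisor", which is the right
 * reaction to "as is" (and avoids dividing by a zero rate). */
unsigned long ns16550_divisor(unsigned long clock_hz, long baud)
{
    if (baud <= 0)
        return 0;
    return clock_hz / (16UL * (unsigned long)baud);
}
```

For example, a 1.8432 MHz clock at 115200 bit/s gives a divisor of 1, while a BaudRate byte of 0 leads to leaving the divisor untouched.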

Because the console on Unix systems is complicated, I also needed to add an ACPI entry to recognize the Marvell UART to make the userspace console work (D20765).

On the console, I could see that AHCI SATA just worked, and so did XHCI USB because of my previous work.

Now, PCIe is of course the fun part. I started plugging in various cards and… only a basic SATA adapter was seen by the OS. It turns out this is due to a firmware workaround for a hilarious hardware bug. Basically, whatever version of the Synopsys DesignWare PCIe controller is used on this SoC just does not do the expected packet filtering, so some devices show up multiplied into all 32 slots on bus 0. Amazing. It is only some devices because others do their own filtering. The workaround chosen in the firmware was to shift the ECAM base address so that the OS would only see the last slot. But this obviously only works with the devices that get multiplied. Unfortunately, there’s no good way to add a quirk for this in the ACPI world, since Linux maintainers have a very strong “fix your hardware” stance on that. So to use any device that does not get multiplied, we just have to use a modified firmware that does not do the ECAM shift.
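The arithmetic behind that firmware workaround can be modeled in a few lines using the standard ECAM layout. This is a sketch of the idea, not the actual EDK2 code; the function names are hypothetical, and the base address used in the example below is arbitrary rather than the board’s real one.

```c
#include <stdint.h>

/* Standard PCIe ECAM layout: each function's 4 KiB configuration space
 * lives at base + (bus << 20 | device << 15 | function << 12). */
uint64_t ecam_addr(uint64_t base, unsigned bus, unsigned dev,
                   unsigned func, unsigned reg)
{
    return base + ((uint64_t)bus << 20 | (uint64_t)dev << 15 |
                   (uint64_t)func << 12 | (reg & 0xfffu));
}

/* Model of the firmware workaround: advertise an ECAM base that is already
 * advanced by 31 device slots, so whatever the OS reads as
 * "bus 0, device 0" is really the last slot, device 31. */
uint64_t shifted_ecam_base(uint64_t real_base)
{
    return real_base + ((uint64_t)31 << 15);
}
```

With a real base of 0xE0000000, the OS’s bus 0, device 0 lands on the real device 31 (offset 0xF8000). Seen this way, the limitation is clear: every address the OS can generate lies at or above the real slot 31, so a well-behaved device that only answers at slot 0 can never be reached.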

With that out of the way, devices started working!

At first there were some funky errors like “igb0: failed to allocate 5 MSI-X vectors, err: 3”, but that was easy to figure out: someone forgot to add an attach function to the MSI support “device” for Arm’s GICv2 interrupt controller, which I fixed in D20775.

With that patch, an Intel network card worked great.

So did a Mellanox one, as I had already enabled that driver on arm64 for the Packet server.

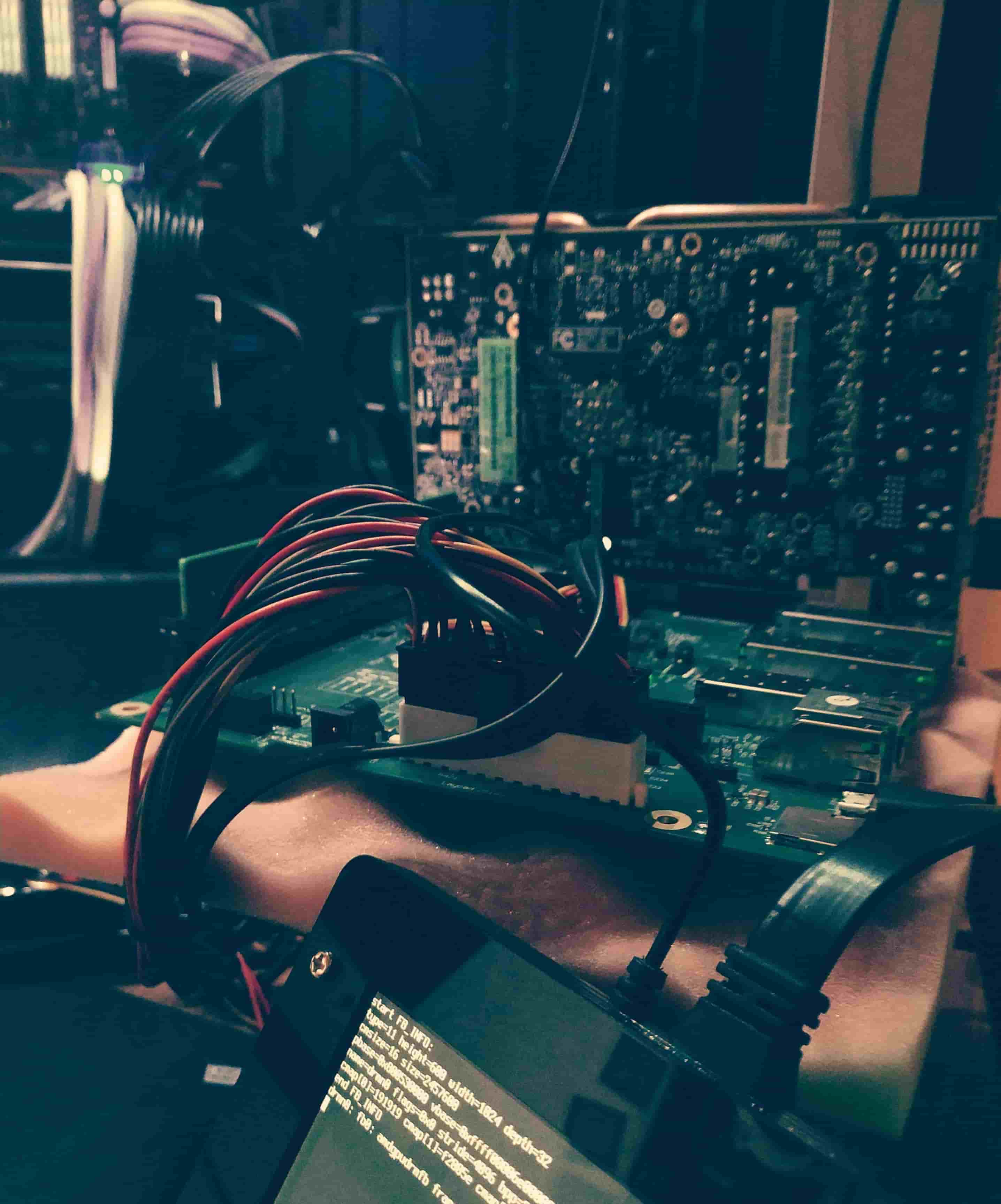

Then it was GPU time, with a Radeon RX 480 that used to be in my main desktop system. After adding the aarch64 build fixes, building and loading, I was first met with a system hang during initialization. All I had to figure out was that it was related to the PCIe memory not actually being mapped as “device memory”. After fixing that in D20789, the GPU was fully initialized, showing the console! Initially there was some display corruption, which was fixed by applying an existing Linux patch. Then I enabled MSI to avoid the same legacy interrupt problem that had been slowing the Scaleway machine down.

And there it was! (dmesg) Later I actually bought a case and assembled the system properly, adding a bunch of USB devices for missing parts like audio and networking. And this was a completely usable desktop. It even supported early boot display output, allowing for boot device selection and such, because the firmware runs the amd64 (!) EFI display driver stored on the card through QEMU. Well, actually, that initially didn’t work on my RX 480 for some reason, though it did on another GPU I tried. It took an email exchange with AMD employees to realize that the problem was that I had previously modified the VBIOS on my card for Overclocking Reasons. And the EFI GOP driver does check the signature on that, unlike the Linux one.

While working on that machine as an actual desktop, I contributed a few more general Arm things, such as improving the armv8crypto driver: I fixed a locking bug (D21012) found by enabling tests (D21018), which I wanted to have for adding XTS mode (D21017).

With XTS support, that driver became actually useful for something (that is, full disk encryption).
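XTS suits disk encryption because each 16-byte block of a sector gets its own tweak: block j of sector i is encrypted as C = E_K1(P xor T) xor T, where T = E_K2(i) multiplied by alpha^j in GF(2^128). The sketch below shows only the portable tweak update between consecutive blocks, following IEEE 1619; it is not the armv8crypto code, which uses the Armv8 AES instructions for the E_K steps, and the function name is hypothetical.

```c
#include <stdint.h>

/* Advance an XTS tweak to the next 16-byte block: multiply it by the
 * primitive element alpha in GF(2^128), using the little-endian byte order
 * of IEEE 1619 and the reduction polynomial x^128 + x^7 + x^2 + x + 1. */
void xts_mul_alpha(uint8_t t[16])
{
    uint8_t carry = t[15] >> 7;            /* bit shifted out of x^127 */
    for (int i = 15; i > 0; i--)
        t[i] = (uint8_t)((t[i] << 1) | (t[i - 1] >> 7));
    t[0] = (uint8_t)(t[0] << 1);
    if (carry)
        t[0] ^= 0x87;                      /* x^7 + x^2 + x + 1 */
}
```

Calling xts_mul_alpha once per block advances T from block j to block j + 1; when the top bit is set, the shifted-out bit is folded back in by XORing 0x87 into the lowest byte.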

Another cryptography-related thing was adding support for getting entropy from UEFI in the boot loader (D20780), since it was supported on this machine’s firmware among others, while standardized armv8.x hardware RNG instructions were quite far away, and this was a quick way to get some of that hardware entropy already into the system without writing a device-specific RNG driver.

I also ported the sbsawdt generic watchdog driver from NetBSD (D20974), but the review process stalled, so it hasn’t landed.

SolidRun Honeycomb LX2K (NXP Layerscape LX2160A)

The next mini-ITX board from SolidRun — also based on a networking SoC (but now a 16-core one!) — made a much bigger splash as it was explicitly marketed as a workstation. It worked well on Linux. I have not actually bought one, as it was a bit too expensive for my “science budget”. Also too expensive to be imported without dealing with customs. So it was up to other people to try FreeBSD on it, with me around to fix the kernel :) And one such person eventually turned up on the mailing lists. (Hi Dan!)

This platform uncovered yet more FreeBSD bugs. Adding support for multiple interrupt resources per ACPI device (D25145) was required to make AHCI SATA work. We also added a quirk for that device (D25157). And for PCIe, interrupts did not work until I figured out (through long collaborative remote debugging sessions) that the IORT table handling code contained an off-by-one error in the range calculations. The fix was just removing a “minus one” (D25179).
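For reference, an IORT ID mapping entry stores an input base, a “Number of IDs” field and an output base, and the specification defines that field as the number of IDs in the range minus one. Below is a minimal sketch of a correct lookup under that convention. The struct and function names are hypothetical, and the guess that the bug amounted to applying the “minus one” a second time is an assumption, not a quote of D25179.

```c
#include <stdbool.h>
#include <stdint.h>

/* One IORT ID mapping entry (only the fields this sketch needs). Per the
 * IORT specification, num_ids holds "the number of IDs in the range minus
 * one", so the last valid input ID is input_base + num_ids. */
struct iort_id_map {
    uint32_t input_base;
    uint32_t num_ids;
    uint32_t output_base;
};

/* Translate an input ID (e.g. a PCIe requester ID) to an output ID (e.g.
 * an ITS device ID). Returns false when the ID is outside the mapping. */
bool iort_map_id(const struct iort_id_map *m, uint32_t in, uint32_t *out)
{
    /* Inclusive end: subtracting one again here would drop the last ID. */
    uint64_t end = (uint64_t)m->input_base + m->num_ids;
    if (in < m->input_base || in > end)
        return false;
    *out = m->output_base + (in - m->input_base);
    return true;
}
```

With input_base 0x100 and num_ids 0xff, the entry covers 256 IDs, 0x100 through 0x1ff; an extra “minus one” would silently drop 0x1ff, and interrupts from a device with that ID would have nowhere to go.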

With PCIe running, it was time to get a graphics card in there.

Initially, it would return a very strange error while executing the “ATOMBIOS” interpreter, which (big hint here) runs bytecode that it has just read from the card over PCIe.

One more exhausting collaborative debugging session later, we figured out what the problem actually was!

On this platform, an offset is required to translate PCIe addresses into raw memory ones.

Because drivers ported from Linux deal with raw addresses, a BUS_TRANSLATE_RESOURCE method on the PCIe driver interface was introduced to deal with this on another platform first (PowerPC).

However, when the pci_host_generic driver used on Arm was written, that kind of translation was only implemented internally and was not exposed via that method.

Instead of reading from the GPU, the driver was reading some unrelated memory location.

To fix that, I landed D30986, and it became a usable desktop!
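The translation itself is simple arithmetic over the host bridge’s address windows. A minimal sketch, assuming a table of windows each mapping a PCIe address range onto a CPU physical range; the struct and function are hypothetical analogues of what a BUS_TRANSLATE_RESOURCE implementation has to compute, not its real signature, and the addresses below are made up rather than the LX2160A’s actual layout.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* One host bridge window: PCIe bus addresses [pci_base, pci_base + size)
 * appear to the CPU at [cpu_base, cpu_base + size). On many systems the two
 * bases are equal; on platforms like the one above they differ by an offset. */
struct pci_window {
    uint64_t pci_base;
    uint64_t cpu_base;
    uint64_t size;
};

/* Turn a PCIe address (e.g. a BAR value) into the CPU physical address a
 * driver must actually map. Returns false if no window covers it. */
bool pci_to_cpu(const struct pci_window *w, size_t n, uint64_t pci_addr,
                uint64_t *cpu_addr)
{
    for (size_t i = 0; i < n; i++) {
        if (pci_addr >= w[i].pci_base &&
            pci_addr - w[i].pci_base < w[i].size) {
            *cpu_addr = w[i].cpu_base + (pci_addr - w[i].pci_base);
            return true;
        }
    }
    return false;   /* not behind this bridge */
}
```

For example, with a window mapping PCIe address 0x40000000 to CPU address 0x9040000000, a BAR at PCIe address 0x40001000 has to be accessed at 0x9040001000; using the raw 0x40001000 reads whatever happens to live there, which matches the symptom described above.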

As a bonus, I also tried adding ACPI attachment for the I2C controller (D24917), but it was too difficult to debug something like that remotely.

These days, this platform is really well supported: someone even landed a networking driver.

Raspberry Pi 4 ACPI Mode

When the ServerReady-compatible firmware (TianoCore EDK2 with ACPI tables) was published, I bought a Pi 4 to play with a new platform for FreeBSD to run on.

The Pi did boot FreeBSD quite easily, but XHCI USB (which they expose in an interesting way, hiding the whole PCIe layer from the OS, which is nice) did not work out of the box.

Namely, the device just wasn’t getting initialized.

After tracing what was going on in ACPI and seeing that the memory accesses the _INI method was doing were… weird, I realized that the memory regions used by ACPI weren’t being mapped as device memory.

I solved the problem in patch D25201 by using the UEFI memory map to figure out the mapping mode for each region mapped by ACPI.
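A minimal sketch of that idea, assuming a simplified memory descriptor: look up the region in the UEFI memory map and only map it as normal cacheable memory when the firmware says it supports write-back, falling back to device memory otherwise. The attribute bit values and page size match the UEFI specification, but the struct, enum and function names are hypothetical, and this policy is an illustration rather than the exact logic of D25201.

```c
#include <stddef.h>
#include <stdint.h>

#define EFI_PAGE_SIZE  4096ULL
#define EFI_MEMORY_UC  0x1ULL   /* uncacheable */
#define EFI_MEMORY_WB  0x8ULL   /* write-back cacheable */

/* The subset of EFI_MEMORY_DESCRIPTOR fields this sketch needs. */
struct efi_md {
    uint32_t type;
    uint64_t phys_start;
    uint64_t num_pages;
    uint64_t attribute;
};

enum map_mode { MAP_DEVICE, MAP_NORMAL_WB };

/* Pick the mapping type for a physical address that ACPI code wants to
 * access: normal write-back memory only if the firmware's memory map says
 * the containing region supports WB; anything else, including addresses
 * the map does not describe at all, is mapped as device memory. */
enum map_mode acpi_map_mode(const struct efi_md *map, size_t n, uint64_t pa)
{
    for (size_t i = 0; i < n; i++) {
        uint64_t start = map[i].phys_start;
        uint64_t len = map[i].num_pages * EFI_PAGE_SIZE;
        if (pa >= start && pa - start < len)
            return (map[i].attribute & EFI_MEMORY_WB) ? MAP_NORMAL_WB
                                                      : MAP_DEVICE;
    }
    return MAP_DEVICE;
}
```

Defaulting to device memory for unknown addresses is the conservative choice: mapping real MMIO as cacheable memory is exactly the kind of mistake that produces the “weird” accesses described above.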

Then I added ACPI bindings for the DWC OTG USB driver (D25203), which is used on the Pi 4 for the USB-C port.

Now, the most interesting platform quirk on the Pi 4 is that due to weird Broadcom bugs, the XHCI USB controller can only DMA to the first 3GB of memory.

Due to that, by default the firmware limits the memory to 3GB.

My attempt at supporting fully unlocked memory mode was D25219 which interpreted ACPI _DMA objects as FreeBSD bus_dma_tags.

Apparently it didn’t solve all the problems, but it seemed to work fine for me.
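In bus_dma terms, a 3GB limit means any buffer extending past 0xBFFFFFFF has to be bounced through memory the controller can reach. The check below is a standalone sketch of that decision, assuming a single DMA-able range starting at address 0, which is a simplification of what ACPI _DMA objects can describe. The limit plays the role of a bus_dma tag’s lowaddr, but the names are hypothetical and this is neither the busdma API nor the code from D25219.

```c
#include <stdbool.h>
#include <stdint.h>

/* Highest address the Pi 4's XHCI controller can reach under the 3GB
 * limit described above (0xC0000000 - 1). */
#define XHCI_DMA_LOWADDR 0xBFFFFFFFULL

/* A buffer can be handed to the device directly only if every byte lies at
 * or below the limit; otherwise it must be copied through a bounce buffer
 * allocated in low memory. */
bool needs_bounce(uint64_t paddr, uint64_t len, uint64_t lowaddr)
{
    if (len == 0)
        return false;
    uint64_t last = paddr + len - 1;
    return last < paddr /* wrapped around */ || last > lowaddr;
}
```

A 4 KiB buffer ending exactly at 0xBFFFFFFF can be used directly, while one that straddles the 3GB boundary, or lives entirely above it, must be bounced.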

Experiments with application sandboxing

FreeBSD’s Capsicum sandboxing is a brilliant invention. Turning POSIX into a strict capability-based system just like that is amazing. Clearly I’m not the only one obsessed with this idea, as Capsicum has led to CloudABI and ultimately WASI.

Now of course, Capsicum was originally designed for applications that sandbox themselves and make extensive use of openat-style system calls.

There is good work in the direction of popularizing this approach among developers, such as the cap-std Rust crate.

However, it is still quite rare to encounter applications designed in a capability-friendly way.

What do we do about all the existing software? Can’t we just, like, trick them into working in that sandbox?

Yes, we can: at least we can convert non-relative, global-path-based open-style calls into openat-family calls below pre-opened descriptors, based on prefix matching.

This is what the libpreopen LD_PRELOAD library does.
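The core of that trick is a longest-prefix lookup followed by an openat call. Below is a minimal sketch of the technique with hypothetical names; it is not libpreopen’s actual API, and it only wraps open, whereas a real preload library has to wrap the whole family of path-taking calls.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <fcntl.h>
#include <stddef.h>
#include <string.h>

/* One pre-opened directory: absolute paths under `prefix` are resolved
 * relative to `dirfd` instead of the global namespace. */
struct preopen {
    const char *prefix;   /* non-empty absolute path, e.g. "/usr/share" */
    int dirfd;
};

/* Find the longest prefix matching `path` on a path-component boundary.
 * Returns its directory fd and stores the remaining relative path (or "."
 * for the directory itself) in *rel; returns -1 if nothing matches. */
int preopen_lookup(const struct preopen *po, size_t n, const char *path,
                   const char **rel)
{
    size_t best = n, best_len = 0;
    for (size_t i = 0; i < n; i++) {
        size_t len = strlen(po[i].prefix);
        if (strncmp(path, po[i].prefix, len) != 0)
            continue;
        /* "/usr/share" must not match "/usr/shared/x". */
        if (po[i].prefix[len - 1] != '/' && path[len] != '/' &&
            path[len] != '\0')
            continue;
        if (best == n || len > best_len) {
            best = i;
            best_len = len;
        }
    }
    if (best == n)
        return -1;
    const char *r = path + best_len;
    while (*r == '/')
        r++;
    *rel = (*r == '\0') ? "." : r;
    return po[best].dirfd;
}

/* open(2) stand-in: rewrite an absolute path into openat(2) below the
 * matching pre-opened directory; paths outside every prefix are refused
 * (the errno value is an arbitrary choice for this sketch). */
int sandboxed_open(const struct preopen *po, size_t n, const char *path,
                   int flags)
{
    const char *rel;
    int dirfd = preopen_lookup(po, n, path, &rel);
    if (dirfd < 0) {
        errno = EACCES;
        return -1;
    }
    return openat(dirfd, rel, flags);
}
```

With /usr and /usr/share both pre-opened, "/usr/share/fonts/a.ttf" resolves to "fonts/a.ttf" under the /usr/share descriptor, "/usr/shared/x" falls back to "shared/x" under /usr, and "/etc/passwd" is refused.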

My first experiment was building the capsicumizer, an easy launcher for libpreopen-ifying an application.

I had to contribute extra wrappers to libpreopen itself, and in the end I was able to use even GUI apps like gedit in the sandbox.

I also experimented with implementing a libpreopen-like wrapper inside Firefox for Firefox’s content process self-sandboxing.

However, the LD_PRELOAD approach has its limitations.

Not only does it not work with programs that make raw syscalls (like anything written in Go), but it was also really hard to cover the entire POSIX surface in those wrappers.

If only we could hook one central location in the code that’s responsible for all the path lookups, right?

Well, that is located in the kernel, in the namei function.

In my kpreopen experiment, I implemented the preopened path lookup right in the kernel, and it was a lot cleaner.

However, it was flawed: the illusion of a filesystem constructed by these lookup hooks falls apart quite easily if the application itself starts using directory descriptors and openat-style calls.

Now I have realized that the way to avoid that flaw is to make it simpler: no path prefixes in the kernel, just one single file descriptor that would be used as the replacement for AT_FDCWD (the global VFS namespace).

That would push the construction of a sandbox FS out to userspace, where a sequence of mounts (nullfs bind mounts, FUSE, etc.) could be used to build an actual FS tree, which wouldn’t have any of the problems a flimsy fake one would.

I haven’t had the time to implement that yet, but this would definitely be far more upstreamable than my original experiment.
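To make the single-descriptor idea concrete, here is a userspace model of it: every path, absolute or relative, is resolved beneath one root descriptor, with no prefix table at all. This is only an illustration under stated assumptions; the names are hypothetical, the real proposal would do this inside the kernel’s lookup code, and this sketch does not prevent “..” from escaping the root.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <fcntl.h>

/* Hypothetical per-process root descriptor. In the kernel design described
 * above, path lookup would start from it instead of the global namespace;
 * here it is only a variable consulted by a userspace wrapper. */
static int sandbox_root_fd = -1;

void sandbox_set_root(int fd)
{
    sandbox_root_fd = fd;
}

/* Resolve every path against the single root descriptor: leading slashes
 * are dropped, so "/etc/hosts" becomes "etc/hosts" beneath it, and relative
 * paths land there too (this sketch has no separate working directory).
 * Plain openat() does not stop ".." from climbing out; the kernel version
 * would have to enforce that during lookup. */
int rooted_open(const char *path, int flags)
{
    if (sandbox_root_fd < 0) {
        errno = EBADF;
        return -1;
    }
    while (*path == '/')
        path++;
    if (*path == '\0')
        path = ".";
    return openat(sandbox_root_fd, path, flags);
}
```

Whatever tree the root descriptor points at, assembled in advance from nullfs or FUSE mounts, becomes the whole filesystem as far as such lookups are concerned, so there is no fake namespace for directory descriptors to see through.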